Initial Access Operations Part 2: Offensive DevOps

The Challenge

As stated in PART 1 of this blog, the Windows endpoint defense technology stack in a mature organization represents a challenge for Red Team initial access operations. To succeed at initial access in a well-instrumented environment, an artifact typically needs to meet a minimum bar, such that:

- The generated software artifact is unique to evade any static analysis detection.

- Windows event tracing is squelched in user mode artifact execution.

- Kernel callback notifications are either “not interesting” or, even better, are disabled.

- Artifact API use is not interesting enough to trigger some dynamic NTDLL API hooking behavior.

- Parent child process relationships are not interesting from a process tree analysis perspective.

- Any process thread creation is preferably backed by some signed/legitimate disk (DLL module) artifact.

- Sandbox execution will force our artifact to abort/terminate immediately.

- Any kernel level exploitation cannot use a driver on the block list.

While the above is easy to write, the actual software/malware development techniques to achieve the goals are less trivial. To be fully effective, we need the ability to dynamically recompile malware artifacts so that we can change artifact size and entropy, use unique encryption keys, re-process incoming shellcode, introduce operator-configurable options, sign artifacts, and more. All of these items point directly to a continuous integration/continuous delivery (CI/CD) approach to achieve a level of continuous defense evasion for produced artifacts. We used the CI/CD feature set of GitLab Community Edition to implement this approach. The following describes a generic approach I call “Malware As A Service (MAAS)” and introduces a public repository as a template that you might employ to achieve similar goals.

GitLab CI/CD Pipelines

The GitLab CI/CD pipeline is driven by a human-readable YML file syntax, which is enabled by placing a file named “.gitlab-ci.yml” in the root directory of a GitLab repository.

- The YML syntax allows us to define build stages into the pipeline with different jobs associated with each stage.

- Any single pipeline run can be triggered by a “git commit” and push or merge action for the repository.

- The YML syntax allows us to define trigger actions, which in turn allow for dynamically created child pipelines.

- Any child pipeline is simply another YML file being triggered by the parent/global YML configuration.
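As a rough illustration of the points above, the following Python sketch writes out a minimal parent pipeline with one job that generates a child YML file and one job that triggers it. The stage names, job names, and the `generate_child.py` script are hypothetical placeholders and are not taken from our repository; `stages`, `script`, `artifacts`, and `trigger:include:artifact` are standard GitLab CI/CD keywords.

```python
from pathlib import Path

# Hypothetical parent pipeline: the first job generates child YML and keeps it
# as a job artifact, the second job triggers a child pipeline from that file.
PARENT_PIPELINE = """\
stages:
  - prepare
  - bake

generate-child-config:
  stage: prepare
  script:
    - python3 generate_child.py > child-pipeline.yml
  artifacts:
    paths:
      - child-pipeline.yml

run-child-pipeline:
  stage: bake
  trigger:
    include:
      - artifact: child-pipeline.yml
        job: generate-child-config
"""


def write_parent_pipeline(repo_root: Path) -> Path:
    """Write the parent definition to .gitlab-ci.yml in the repository root."""
    target = repo_root / ".gitlab-ci.yml"
    target.write_text(PARENT_PIPELINE, encoding="utf-8")
    return target


def declared_stages(pipeline_text: str) -> list:
    """Return the stage names listed under the top-level 'stages:' key."""
    stages, in_stages = [], False
    for line in pipeline_text.splitlines():
        if line == "stages:":
            in_stages = True
        elif in_stages and line.strip().startswith("- "):
            stages.append(line.strip()[2:])
        elif in_stages:
            break  # first line that is not a list item ends the stages block
    return stages
```

Committing and pushing the resulting file is what would start a pipeline run; the child pipeline only exists once the first job has produced its YML.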

GitLab Runners

A GitLab runner is software installed on a standalone server or virtual container that uses the GitLab API to poll a repository for pipeline jobs to be executed. When it first starts, a GitLab runner will register itself with the API, thus allowing it to subsequently service any pipeline jobs that are sent to it.

The runner will process instructions in the GitLab YML file, which, in most cases, will include the processing of a script indicated by the script tag in the YML content. The script is commonly executed by the native operating system “shell.” In the case of Windows, this might be CMD.EXE, and in the case of Linux, this might be /bin/bash or similar. The “shell” used is configurable and could, for example, be set to “pwsh.exe” or “pwsh” if you wanted a common shell syntax across platforms.

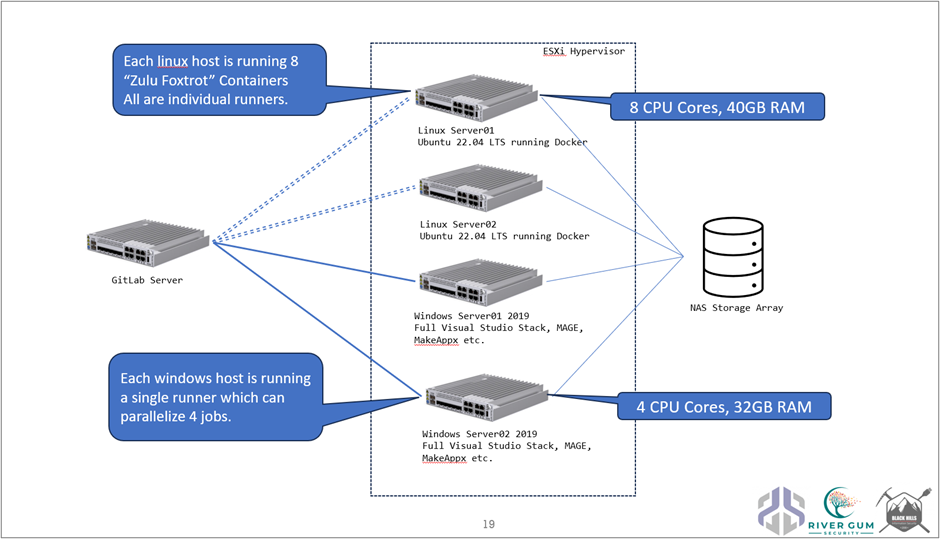

Based on our software compilation needs, we chose to deploy both Windows-based runners, and Linux-based runners, with PowerShell configured on the Windows systems and the default /bin/bash shell configured on Linux runners, which we chose to deploy within Docker containers.

The specific architecture choice for using Docker was driven largely by the set of Python scripts which generate dynamic pipeline configuration information. Docker essentially eases the versioning and change control burden within the overall environment.

In our case, we do require full stack Microsoft compilation tools, as well as Linux-based mingw-gcc, Golang, Rust, and a full mono/.NET installation. This necessitated the deployment of both Windows- and Linux-based runner architecture with shared storage. The diagram below is our version 1.0 architecture.

The astute observer will notice that Docker is deployed on both Linux hosts, but not on the Windows hosts. While it is possible to run Docker on the Windows platform, at the time of this first version of the architecture, there was a requirement to pick between Hyper-V Windows container mode or Linux container mode, and it simply became easier to deploy these servers in their native O/S form. You will also notice that this entire architecture is contained within an ESXi Hypervisor, which, in the future, will be scaled up and replicated for redundancy.

Artifact Generation Docker Containers

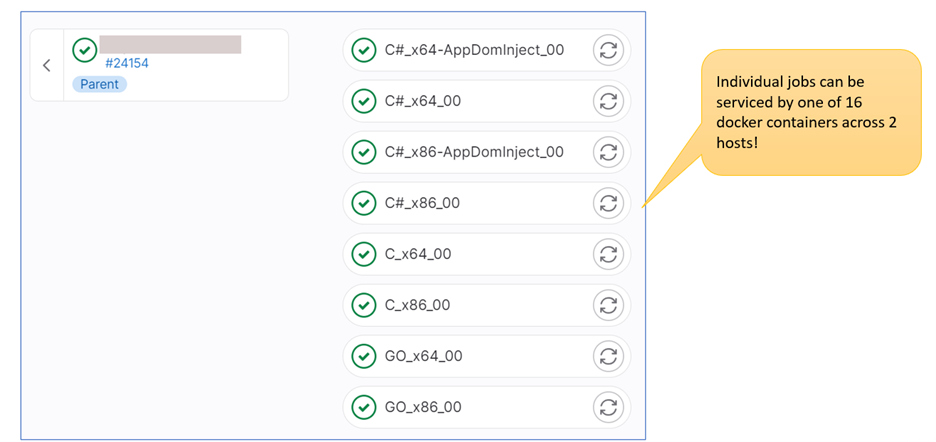

Using Docker swarm and shared storage in the backend allows us to deploy dedicated Docker containers for dynamic malware generation. We currently have two different types of containers, one of which is a Python-based framework for compiling C#, Golang, and C/C++ source code, and a second which is dedicated to the Rust language and compiler.

As a part of the C#/.NET framework container system, we have deployed modules to perform source code obfuscation, various evasion techniques such as ETW and AMSI bypass patching, and PE/COFF fixes as needed, such as recalculation of PE header checksums.

CI/CD Pipeline Execution

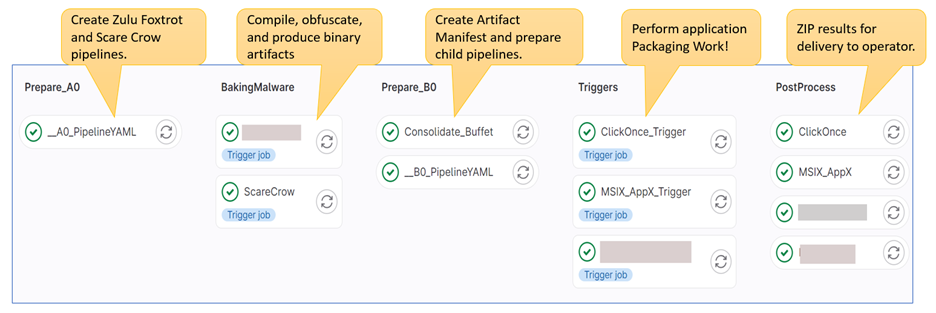

The diagram below was captured directly from GitLab and shows various stages of pipeline execution. There are early preparation stages, artifact generation stages, dynamic child pipeline trigger stages, and post-processing activities listed. Parts of the CI/CD pipeline perform dedicated tasks, such as MSIX/AppX and ClickOnce package generation from existing malware artifacts.

Pipeline job parallelization is achieved via both GitLab runner configuration options and multiple Docker container registration in the overall architecture. One of the dedicated containers uses the idea of resource script consumption, which allows us to split compilation jobs by architecture and compilation language. There are, of course, other ways to parallelize runner execution jobs, depending on the way that dynamic child pipeline YML configuration is generated.
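To make the idea of splitting jobs by architecture and language concrete, here is a minimal sketch that builds one job definition per (language, architecture) pair. The language list, the `linux-docker` runner tag, the variable names, and `build.py` are all assumptions made for illustration; our real resource scripts look different.

```python
from itertools import product

# Hypothetical values; the real pipeline's languages and targets may differ.
LANGUAGES = ["csharp", "golang", "c"]
ARCHITECTURES = ["x86", "x64"]


def build_job_matrix(languages, architectures, runner_tag="linux-docker"):
    """Return one independent job definition per (language, architecture) pair."""
    jobs = {}
    for lang, arch in product(languages, architectures):
        jobs[f"compile-{lang}-{arch}"] = {
            "stage": "bake",                # all jobs share one stage
            "tags": [runner_tag],           # selects which runners may take the job
            "variables": {"TARGET_LANG": lang, "TARGET_ARCH": arch},
            "script": ["python3 build.py"],  # hypothetical build entry point
        }
    return jobs


if __name__ == "__main__":
    for name in build_job_matrix(LANGUAGES, ARCHITECTURES):
        print(name)
```

Because every job lands in the same stage, GitLab can hand them to separate runners at the same time, so the degree of parallelism is bounded by how many runners (or containers) are registered.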

GitLab Runner YML Files and Python Generation Scripts

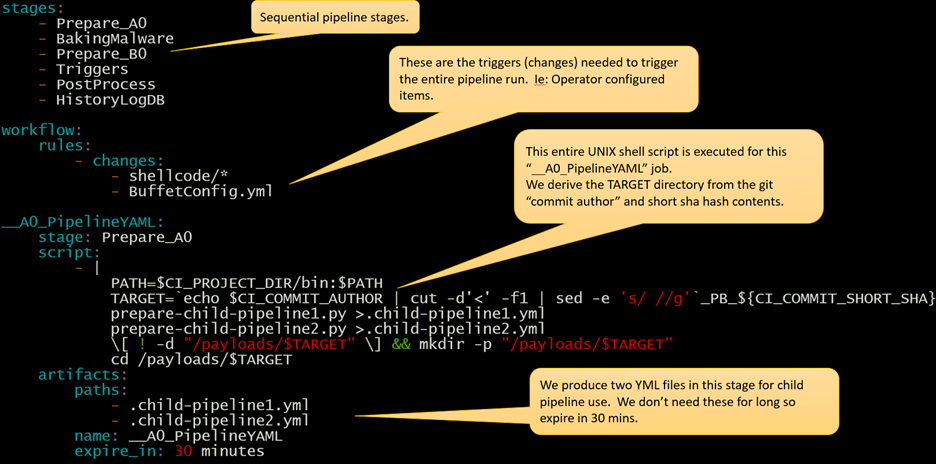

The master/parent pipeline script is contained in the “.gitlab-ci.yml” file, in the root directory of the repository. Within this file, dynamic child pipeline triggers are defined which call out to Python scripts used to generate more YML configuration for downstream stage execution of pipeline jobs. These generated YML file artifacts are passed down from earlier to later stages of the pipeline execution.
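A generation script of this kind can be quite small. The sketch below is an assumption-laden illustration rather than one of our actual scripts: the target names and `bake.py` are hypothetical, and the tiny YAML emitter only handles nested dicts, lists of scalars, and scalars. A production script would more likely use a YAML library such as PyYAML.

```python
import json


def to_yaml(node, indent=0):
    """Render nested dicts, lists of scalars, and scalars as block-style YAML lines."""
    pad = " " * indent
    lines = []
    for key, value in node.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.extend(to_yaml(value, indent + 2))
        elif isinstance(value, list):
            lines.append(f"{pad}{key}:")
            # json.dumps yields a double-quoted string, which is also valid YAML
            lines.extend(f"{pad}  - {json.dumps(str(item))}" for item in value)
        else:
            lines.append(f"{pad}{key}: {json.dumps(str(value))}")
    return lines


def generate_child_pipeline(targets):
    """Build child pipeline YML text with one job per requested target."""
    pipeline = {"stages": ["bake"]}
    for name in targets:
        pipeline[f"bake-{name}"] = {
            "stage": "bake",
            "script": [f"python3 bake.py --target {name}"],  # hypothetical script
        }
    return "\n".join(to_yaml(pipeline)) + "\n"


if __name__ == "__main__":
    print(generate_child_pipeline(["exe", "dll"]), end="")
```

The parent pipeline would redirect this output to a file, keep it as a job artifact, and reference it from a later trigger job.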

The diagram below is a snapshot of the master “.gitlab-ci.yml” file showing numerous aspects including pipeline stages, workflow rules for global pipeline triggering actions, and the initial preparation stage, which dynamically generates some YML for downstream stages.

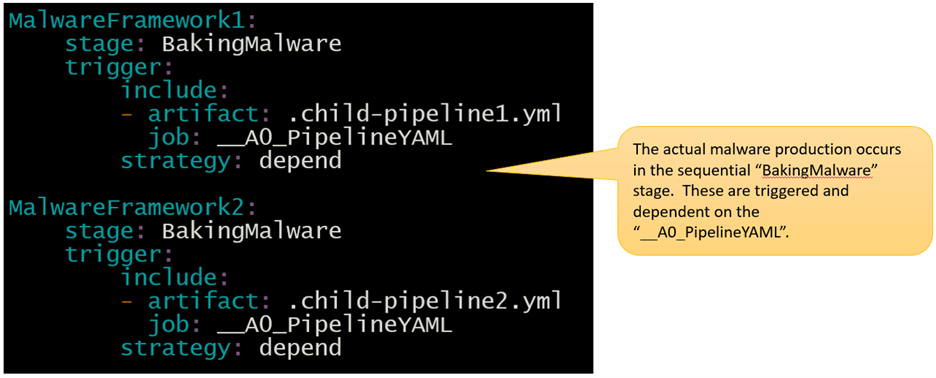

For completeness, I have also included a screenshot of the configuration section showing the dynamic trigger dependencies executed as part of the “BakingMalware” stage of the pipeline.

How to Operate the Malware Artifact Generation Pipeline

There are numerous potential inputs that a Red Team operator might need to supply. These might be anything from the shellcode itself to various evasion switches, IP address information, URLs, and more.

We fondly refer to our internal pipeline as a Payload or Malware Buffet, and, as such, I provide a configuration file called “BuffetConfig.yml” which the operator must edit in order to subsequently push up the changes and trigger the pipeline execution. This particular YML file is processed by various scripts inside the pipeline execution and is distinctly different from the YML content that drives the pipeline itself.

To further enhance and standardize our configuration, the default parameters of this “BuffetConfig.yml” file are now represented within Python classes using Python “pydantic” models. This step allows us to move further towards automated triggering of this pipeline by other operational deployment software in our organization. A web interface for configuration is also on our roadmap.
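The general pattern of keeping defaults and validation in typed classes can be sketched as follows. To keep the example dependency-free, it uses standard library dataclasses as a stand-in for pydantic, and every field name here (`listener_ip`, `listener_url`, `inflate_mb`, `sign_artifact`) is hypothetical rather than a real “BuffetConfig.yml” key.

```python
from dataclasses import dataclass, field
from ipaddress import ip_address


@dataclass
class EvasionOptions:
    # Hypothetical switches; defaults live in code rather than in the YML file.
    inflate_mb: int = 20
    sign_artifact: bool = False

    def __post_init__(self):
        if self.inflate_mb < 0:
            raise ValueError("inflate_mb must be non-negative")


@dataclass
class BuffetConfig:
    listener_ip: str = "127.0.0.1"
    listener_url: str = "https://example.invalid/"
    evasion: EvasionOptions = field(default_factory=EvasionOptions)

    def __post_init__(self):
        ip_address(self.listener_ip)  # raises ValueError on a malformed address
        if not self.listener_url.startswith(("http://", "https://")):
            raise ValueError("listener_url must be an http(s) URL")


def load_config(overrides: dict) -> BuffetConfig:
    """Merge operator overrides (already parsed from YML) onto the defaults."""
    data = dict(overrides)
    evasion = EvasionOptions(**data.pop("evasion", {}))
    return BuffetConfig(evasion=evasion, **data)
```

With this shape, a mistyped key fails with a `TypeError` and a malformed value fails with a `ValueError` before any pipeline job starts, which is the kind of early feedback that typed models give over free-form YML.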

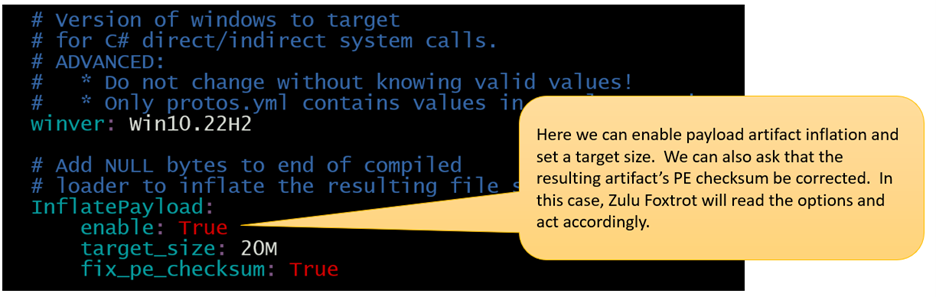

This diagram is an example of what a portion of this “BuffetConfig.yml” file contains. Note that “Zulu Foxtrot” is a reference to a malware artifact generation container.

Expected Results from a Pipeline Execution

When the pipeline is triggered and all of the stages have executed, the post-processing stages produce ZIP files of the generated artifacts to be used by Red Team operators. There will be multiple ZIP files containing items like the raw artifacts themselves (EXEs, DLLs, reflective DLLs, XLLs, and other desired files), manifest information, MSIX/AppX, ClickOnce, and other packaging methods.
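A post-processing step of this kind might look roughly like the sketch below, which groups files into ZIP bundles by extension. The bundle names and the extension mapping are hypothetical; our actual packaging is organized differently.

```python
import zipfile
from pathlib import Path

# Hypothetical mapping from file extension to output bundle name.
BUNDLES = {".exe": "executables.zip", ".dll": "libraries.zip", ".xll": "libraries.zip"}


def package_artifacts(artifact_dir: Path, out_dir: Path) -> dict:
    """Group artifacts into ZIP bundles by extension; return bundle -> member names."""
    out_dir.mkdir(parents=True, exist_ok=True)
    contents = {}
    for path in sorted(artifact_dir.iterdir()):
        bundle = BUNDLES.get(path.suffix.lower())
        if bundle is None or not path.is_file():
            continue  # skip directories and file types with no bundle
        # Mode "a" creates the archive on first use and appends afterwards.
        with zipfile.ZipFile(out_dir / bundle, "a", zipfile.ZIP_DEFLATED) as zf:
            zf.write(path, arcname=path.name)
        contents.setdefault(bundle, []).append(path.name)
    return contents
```

The returned mapping is a convenient starting point for a manifest, since it already records which file went into which bundle.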

Using a modest configuration with only one artifact generation container configured, example results are as follows:

- 57 binary artifacts and HTML-based MANIFEST

- 19 EXEs of both the Windows managed (.NET) and native unmanaged variety

- 26 DLLs, both managed and unmanaged

- 4 RDLLs (reflective, native/unmanaged)

- 6 XLLs (Excel DLLs)

- 9 MSIX/AppX packages

- 9 ClickOnce packages

- Over 1 GB of total data content produced with a modest 20 MB artifact inflation (entropy reducer) configuration in place
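For readers unfamiliar with the “entropy reducer” term, the measurement behind it is Shannon entropy over byte frequencies, $H = -\sum_i p_i \log_2 p_i$, which ranges from 0 to 8 bits per byte. The sketch below only computes that figure for a byte string; it is a generic illustration and not code from our pipeline.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return Shannon entropy in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in Counter(data).values())


if __name__ == "__main__":
    uniform = bytes(range(256))          # every byte value exactly once
    padded = uniform + bytes(768)        # same content plus 768 zero bytes
    print(f"uniform: {shannon_entropy(uniform):.2f} bits/byte")
    print(f"padded:  {shannon_entropy(padded):.2f} bits/byte")
```

Appending a large run of repetitive bytes skews the frequency distribution, so the padded sample measures far lower than the uniform one even though the original content is unchanged.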

Metrics and Tracking

One of the last post-processing stages of the pipeline uses a Python script which calculates various hashes (MD5, SHA1, and SHA256) of produced artifacts and writes the result into a SQLite database with date/timestamp information for tracking purposes.
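A minimal sketch of such a tracking script is shown here. The table name, column names, and timestamp format are assumptions made for the example and do not reflect our actual schema.

```python
import hashlib
import sqlite3
from datetime import datetime, timezone
from pathlib import Path


def hash_file(path: Path) -> dict:
    """Compute MD5, SHA1, and SHA256 digests of a file in one pass."""
    digests = {name: hashlib.new(name) for name in ("md5", "sha1", "sha256")}
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            for d in digests.values():
                d.update(chunk)
    return {name: d.hexdigest() for name, d in digests.items()}


def record_artifacts(db_path, artifact_dir: Path) -> int:
    """Insert one row per artifact with its hashes and a UTC timestamp."""
    conn = sqlite3.connect(db_path)
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "CREATE TABLE IF NOT EXISTS artifacts ("
            "name TEXT, md5 TEXT, sha1 TEXT, sha256 TEXT, created_utc TEXT)"
        )
        now = datetime.now(timezone.utc).isoformat(timespec="seconds")
        rows = []
        for p in sorted(artifact_dir.iterdir()):
            if p.is_file():
                h = hash_file(p)
                rows.append((p.name, h["md5"], h["sha1"], h["sha256"], now))
        conn.executemany("INSERT INTO artifacts VALUES (?, ?, ?, ?, ?)", rows)
    conn.close()
    return len(rows)
```

Keeping the database file outside the artifact directory avoids hashing the database itself on later runs.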

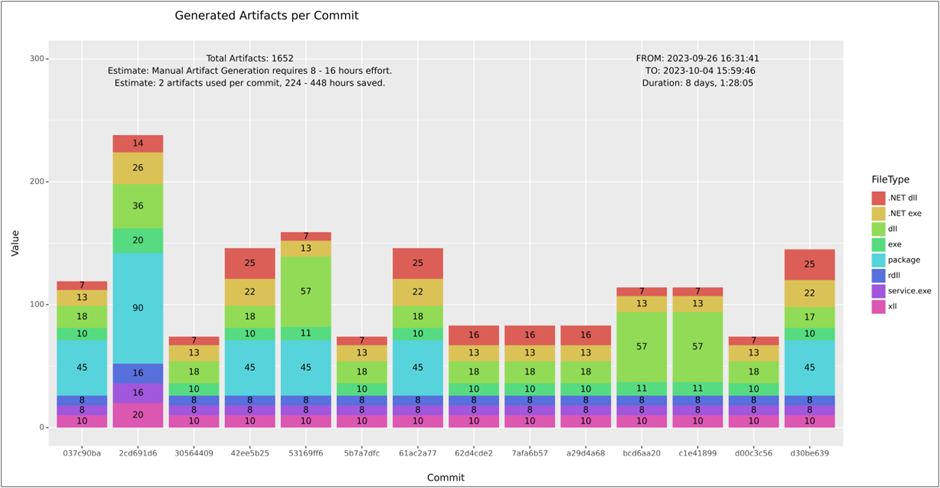

This data allows us to do numerous things, including producing summaries of artifact generation over time in graphical format. The diagram below shows some artifact generation statistics from the pipeline during the month of September 2023.
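The numbers behind a chart like this can come from a simple aggregate query. The sketch below assumes a hypothetical `artifacts` table with an ISO 8601 `created_utc` text column; both names are illustrative only.

```python
import sqlite3


def artifacts_per_day(conn: sqlite3.Connection, month: str) -> list:
    """Return (day, count) rows for a month given as 'YYYY-MM'."""
    return conn.execute(
        "SELECT substr(created_utc, 1, 10) AS day, COUNT(*) "
        "FROM artifacts WHERE substr(created_utc, 1, 7) = ? "
        "GROUP BY day ORDER BY day",
        (month,),
    ).fetchall()
```

Calling `artifacts_per_day(conn, "2023-09")` would return one row per day with at least one artifact, ready to feed into a plotting library.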

Advantages of CI/CD Approach

Combined with the Docker container frameworks, we can achieve automated generation and delivery of unique binary artifacts on every single pipeline run. This includes a large diversity of artifact types with unique encryption key generation for any embedded artifacts, and unique source code obfuscation techniques.

The overall pipeline approach gives us flexibility to add or modify malware development techniques in a systematic way and make them quickly available to Red Team operators. The pipeline also opens up opportunities for different forms of pre- and post-processing, whether in the form of further embedded shellcode encoding or post-artifact repackaging. There is no doubt that this approach enables us to deliver defense bypass techniques that easily evade static artifact analysis, and instead raise the bar to behavioral analysis during artifact execution.

Disadvantages of CI/CD Approach

The pipeline configuration and execution have significant complexity. There are many software dependencies in the form of multiple language compilers, multiple different tools, and versioning concerns on the GitLab runners.

In addition, the speed of CI/CD pipeline troubleshooting is a challenge, with an average pipeline run taking anywhere from 3 to 10 minutes. The current architecture is also oversensitive to simple syntax errors in configuration input, and the Python scripts that drive the dynamic elements are increasingly bloated.

Using “git” itself is not an ideal operator user interface; a move towards a frontend web interface remains on the roadmap to improve this aspect.

Lastly, the Red Team operators can feel overwhelmed by the large diversity of artifacts produced. This could be phrased as a “too many toys to play with” sort of problem. Reminding operators to “Read the Manifest” is one aspect of this. However, there is potential in the future to introduce configuration presets to reduce the uncertainty and the overwhelming volume of pipeline execution results.

Miscellaneous Penetration Tester Comments

After having achieved a degree of successful adoption of this technology, I decided to informally poll our penetration testers about this creation. I asked this question: “How many hours would it take you to manually produce the necessary evasive artifacts that you now get automatically from the malware buffet pipeline?”

The responses and ensuing discussion are summarized by these thoughts:

- We think this saves 8 – 16 hours of time per customer engagement.

- My development skills are limited, and this pipeline is an invaluable/critical resource for me.

- Before this existed, I would spend 2 days fumbling around on the internet and compiling various proof of concept (POC) code, just hoping that it would work for me.

- Your work has changed how I operate. I now focus a lot more on the engagement operation itself.

- Your work has brought a lot more consistency across our customer engagements.

Conclusion

It should be noted that the underlying technologies employed in the pipeline are many and varied, including both published projects online, as well as software written here at Black Hills Information Security. In the process of developing evasive malware artifacts, there are many techniques, and we stand on the shoulders of a great many people in our community willing to share research and resources.

From the CI/CD development perspective, I have put together a resource which should be viewed as a templated approach for standing up your own pipeline projects. It’s sort of a “paint by numbers” guideline effort which you can find here at: https://github.com/yoda66/MAAS

Notable tools and projects that went into many aspects of development include the following:

- WinDbg – yes, the Windows Debugger. Look for the MS app store version!

- System Informer

- SysInternals Process Explorer

- API Monitor

- MS Visual Studio, Mono, and MinGW-w64 cross compiler

- Python3 for many YML generation scripts

- PE-sieve

- dnSpy

- Resource Hacker

- Many different .NET Obfuscation Technologies and Projects

- SysWhispers3

In addition, there are many fine individuals and companies who are kind enough to share blogs and articles about various development and evasion techniques. Here is a list of various resources I have turned to at various times. Of course, given the nature of our work, published information is changing all the time. I hope you enjoyed reading this and gained some insight into techniques and methodology that you might choose to employ in your work also.

- https://docs.gitlab.com/ee/ci/

- https://defuse.ca/online-x86-assembler.htm

- https://www.blackhillsinfosec.com/avoiding-memory-scanners/

- https://www.elastic.co/fr/security-labs/upping-the-ante-detecting-in-memory-threats-with-kernel-call-stacks

- https://www.cobaltstrike.com/blog/behind-the-mask-spoofing-call-stacks-dynamically-with-timers

- https://jsecurity101.medium.com/uncovering-windows-events-b4b9db7eac54

- https://codemachine.com/articles/x64_deep_dive.html

- https://www.crowdstrike.com/blog/crowdstrikes-advanced-memory-scanning-stops-threat-actor/

- https://securityintelligence.com/x-force/direct-kernel-object-manipulation-attacks-etw-providers/

- https://www.mdsec.co.uk/2023/09/nighthawk-0-2-6-three-wise-monkeys/
